An attorney outlines the main risks for offsite manufacturers and offers advice on how to mitigate them.

- Contracts tend to shield robot manufacturers from any liability beyond the cost of the robot, which can leave users on the hook for large dollar amounts.

- Even if the liability shield is negotiated out, the manufacturer can still claim human error. To counter this, the user needs an effective, well-documented training program.

- AI programs that are used for design can steal copyrighted material without attribution. They must be programmed in a way that prevents that.

Ronald Ciotti is a Partner at Hinckley Allen, a law firm with eight US offices. For more than 20 years, his legal practice has focused solely on construction, including work with modular manufacturers.

Ciotti is very involved in the industry. He’s on the board of the Associated General Contractors of America, a member of its Building Division Leadership Council, Chair of its Contract Documents Forum, and Chair of its Prefabrication Working Group, as well as a National Director on the Associated Builders and Contractors’ National Board of Directors. We talked with him about the legal risks from incorporating robots and AI [artificial intelligence] into an offsite manufacturer’s operations. We also asked how those risks can be mitigated.

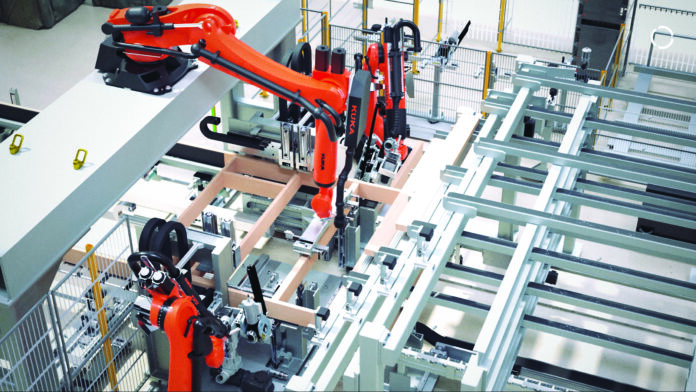

Credit: KUKA Group

The Main Liabilities

Robots play many roles in offsite construction. They're used not only in manufacturing processes, such as nailing OSB sheathing to wall studs, but also to move materials around inside a factory. Drones are also used to observe components being set on site.

When it comes to legal issues, the main problem (from the manufacturer’s perspective) is that when a manufacturer buys a robot, the contract typically includes “a limitation of liability [for the supplier], usually restricted to the price of the robot or less,” Ciotti says. If the robot malfunctions and “does something that creates a mistake in production, the manufacturer can be significantly legally exposed.”

For example, suppose a robot misaligns a screw. “That small error can create massive problems down the road,” Ciotti says. “Usually, if the robot is off once, it’s off for all the production.” That is, it can make the same mistake dozens, hundreds, or even thousands of times. And that mistake can cause further problems in the construction sequence.

Ultimately, this could create a financial burden to rectify or, worse, could compromise the integrity of a finished building. But because the robot supplier specified their limited liability in the contract, the manufacturer is vulnerable to those risks and liable for those costs — even if the robot they bought was faulty.

Ciotti says too many companies find a great robot and negotiate a good price, then neglect to actually read the contract, let alone negotiate it. “It does happen that some robotics companies will take responsibility for problems caused by their robots. You just have to negotiate with each company,” Ciotti says. If the robot supplier is unwilling to negotiate the limited liability, then the purchaser “either needs to decide not to buy the robot or, at a minimum, to buy it knowing the significant risks they’re undertaking by doing so.”

Even if a manufacturer does manage to negotiate away the robot supplier’s limited liability, there’s another problem: The robotics company can blame problems on human error, rather than acknowledging that the robot they sold was at fault.

Ciotti says, “Some of my [offsite manufacturer] clients didn’t have proper policies and instructions beyond just giving employees the instruction manual on the general use of these robotics — which can be hundreds and hundreds of pages long.”

In addition, the manufacturing companies he has dealt with don’t always train employees on how to use and supervise those robots for the specific processes in their specific manufacturing plant. “They basically say [to their employees], ‘Here’s the instruction manual, off you go.’ That’s not good enough to prove their employees were educated and knew how to use the robots.” It’s also not enough to prove that a mistake was not due to human error.

If the manufacturer has an official policy on how employees are trained and can demonstrate that the policy was followed, they should be less vulnerable to a robot supplier’s charge of human error, Ciotti explains. “The plant needs to generate very simple instructions so that each employee knows exactly how to use and supervise the robot on their specific line,” he says. For example, “Employees should know that the robot has a vertical swing of a specific distance and it’s supposed to have five nail guns that attach fasteners at these specific locations.”

If a robot does something it’s not supposed to, the goal, Ciotti says, is to shift the responsibility to the correct party — the robot manufacturer, or supplier.

Another area of legal risk is injuries caused by robots. “Imagine the example of a robot that’s supposed to swing 90 degrees from 12 o’clock to 3 o’clock, but out of the blue it malfunctions and swings 180 degrees from 12 o’clock to 6 o’clock — and hits a visitor to the plant and injures them,” Ciotti says. “Obviously, you want the injured party to be able to sue the robot company, not the offsite manufacturer.”

Again, the offsite manufacturer can protect itself legally by documenting that it properly trained employees on how to use the robots, so that the robot's manufacturer or supplier is less able to claim human error successfully.


Hacker Attacks

Another risk is that robots can be hacked. “Manufacturers need to make sure that their robotics are tied into their cybersecurity system to protect proprietary information relating to the processes they’re using that robot for,” Ciotti says.

Vimal Buck is the Director of Industrial Cybersecurity in the Center for Design and Manufacturing Excellence at Ohio State University. He says that, for relatively unsophisticated small and medium-sized manufacturers, the operational technology (OT) that controls robots and the IT networks where financial data is stored may be on the same system.

“Setting up robots on different networks (OT vs IT) is an easy way to protect yourself from harm,” Buck says. “So is not using the same credentials or passwords for the IT network and the OT network.”
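As a rough illustration of Buck's two suggestions, the sketch below checks that the address ranges assigned to IT and OT do not overlap, and flags usernames that appear on both networks. The subnet values, account names, and function names are hypothetical, and a shared username is only a proxy for reused credentials. The sketch also checks addressing only; it does not prove that traffic is actually blocked between the two networks.

```python
import ipaddress

# Hypothetical subnets, for illustration only; substitute the plant's real ranges.
IT_SUBNETS = ["10.10.0.0/16"]
OT_SUBNETS = ["10.20.5.0/24"]


def find_overlaps(it_subnets, ot_subnets):
    """Return (it, ot) pairs of subnets that share any addresses."""
    overlaps = []
    for it_cidr in it_subnets:
        it_net = ipaddress.ip_network(it_cidr)
        for ot_cidr in ot_subnets:
            ot_net = ipaddress.ip_network(ot_cidr)
            if it_net.overlaps(ot_net):
                overlaps.append((it_cidr, ot_cidr))
    return overlaps


def shared_accounts(it_accounts, ot_accounts):
    """Return usernames present on both networks, a hint of reused credentials."""
    return sorted(set(it_accounts) & set(ot_accounts))


if __name__ == "__main__":
    print("Overlapping subnets:", find_overlaps(IT_SUBNETS, OT_SUBNETS))
    print("Shared accounts:", shared_accounts(["alice", "svc_robot"], ["svc_robot", "bob"]))
```

An empty list from both functions is the desired result; anything else is a prompt to look more closely at how the two networks are set up.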

Another way to enhance security is with an ‘air gap’ between the OT and IT systems. An air gap involves physically isolating a computer or network, so it can’t connect — via wires or wirelessly — with any other computers or network devices. To transfer data between two systems that are isolated from each other, a person would have to physically move data on a flash drive.

An air-gapped computer can also be used to back up and then restore data if another system is hacked. (It’s still possible for hackers to attack an air-gapped system, however. The system can have ‘electromagnetic leakage’ and hackers can analyze the emitted waveforms and use that information to attack the system.)

In addition to stealing information and assets, hackers can also make robots malfunction, or shut them down until a ransom is paid. Worse, “a hacked robot could easily cause physical harm. An industrial robot with a payload of several hundred kilograms in a collision with a human would cause severe injury or death,” Buck says.

An additional possible danger is that hacked robots “could be used to make components that fail quicker,” Buck says, potentially resulting in less safe buildings — or at least ones that require more maintenance and could harm the manufacturer’s reputation.

In addition to separating IT and OT systems, and air gapping, here are some other things Buck says manufacturers can do to increase their cybersecurity:

- Use low-cost sensors to continually verify things like power draw, robot positioning, etc.

- Keep firmware up to date.

- Use Hyperledger Fabric (a blockchain-based technology) to move proprietary data securely across IT and OT systems.

- Employ Zero Trust security, which forces users and devices to be repeatedly re-verified. (This contrasts with castle-and-moat security where everyone who’s gained access to the system is trusted once they’re inside it.)

- Require two-factor authentication to log into any system the factory uses.

- Maintain printed plans for recovery and continuity of operations.
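The first item on the list, continual verification with low-cost sensors, can be pictured as a simple range check. The sketch below is a minimal example under stated assumptions: the channel names, the limits, and the `check_reading` function are all hypothetical, and the 90-degree swing limit simply echoes Ciotti's example of a robot that should travel from 12 o'clock to 3 o'clock. A real plant would take its limits from the documented process for each line.

```python
from dataclasses import dataclass


@dataclass
class Envelope:
    """Expected operating range for one sensor channel (values are hypothetical)."""
    low: float
    high: float

    def contains(self, value: float) -> bool:
        return self.low <= value <= self.high


# Hypothetical limits for one robot cell; real values would come from the
# plant's own documented process for that line.
EXPECTED = {
    "power_draw_kw": Envelope(0.5, 4.0),
    "swing_angle_deg": Envelope(0.0, 90.0),
}


def check_reading(reading: dict) -> list:
    """Return the names of channels that are missing or outside their envelope."""
    alerts = []
    for channel, envelope in EXPECTED.items():
        value = reading.get(channel)
        if value is None or not envelope.contains(value):
            alerts.append(channel)
    return alerts


if __name__ == "__main__":
    # A swing of 180 degrees falls outside the assumed 0 to 90 degree envelope.
    print(check_reading({"power_draw_kw": 2.1, "swing_angle_deg": 180.0}))
```

Treating a missing reading as an alert is a deliberate choice here: a sensor that goes silent is handled the same way as one that reports an out-of-range value.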

“Companies have to treat cybersecurity similar to how they treat safety,” he says. “It’s not the job of any one consultant or expert, but a shared responsibility.”


Potential Privacy and Copyright Issues

Companies use AI for various purposes, including analyzing data from wearable devices. “The AI can tell from Joe’s wearable that he’s over-exerting himself and that he ought to take a break or that he’s at risk of injury. That’s great information and a worthy purpose,” Ciotti says. “But it’s also an incredible invasion of privacy, and potentially violates employees’ HIPAA rights.” (HIPAA is the Health Insurance Portability and Accountability Act, a US federal law that protects patients’ sensitive health information.)

Similarly, other wearables such as vests and helmets can track employees within a manufacturing plant or on a construction site, which also raises privacy concerns. “Manufacturing companies need to have policies regarding these AI-enabled devices and make sure they have sign-off waivers from employees,” Ciotti says, to reduce the risk of an employee suing the company over breaches of privacy. The same goes for facial recognition technology, which “some companies use to allow access to computer systems, just as many of us use it to access our iPhones.”

However, the AI use that Ciotti says “keeps me up at night” is for design. He says, “Companies that don’t have a significant architecture and engineering department, but who provide design work, sometimes use AI to create designs.”

And it’s not just conceptual drawings they’re using AI for. Ciotti says, “I’ve seen AI used for very technical design work. People are asking it to design walls with electrical conduits and plumbing so there aren’t any MEP conflicts, for example.” Or they’re asking it to design a four-bedroom, two-bathroom modular home with modules of a specific size.

The problem is, as Ciotti puts it, “Just because something is out there for free, that doesn’t mean it’s free of rights.” In other words, to create its designs, AI might search the web and use any information it finds — which may or may not be copyrighted.

Usually, the AI doesn’t tell the user what its sources were. “It may have combined bits and pieces of a thousand different public domain designs and come up with something that counts as new. Or it may have found the perfect design, given the user’s specifications, that happens to be designed by a specific architect — who might have a copyright on that design,” Ciotti says. “All of a sudden, there’s a significant issue.” And, because the AI doesn’t tell the user its sources, the user may be completely unaware of the issue until they get a letter from the architect’s lawyer.

Ciotti says that if people in the industry want to use AI for design, “they have to give the AI a dataset to use that they know is free of copyright issues.” Otherwise, they remain vulnerable to claims of copyright infringement.
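One way to picture the vetted dataset Ciotti describes is as a gate that admits a design only when its licence and origin are on record. The sketch below is purely illustrative: the licence labels, the record fields (`id`, `license`, `source`), and the `cleared_for_training` function are assumptions, not an established industry format. Passing this check is bookkeeping, and it does not by itself establish that a design is legally clear to use.

```python
# Hypothetical licence labels that a company's counsel has approved for reuse.
APPROVED_LICENSES = {"owned-in-house", "public-domain", "licensed-with-contract"}


def cleared_for_training(records):
    """Split design records into cleared and held-back lists.

    A record is cleared only if it names an approved licence AND records
    where the design came from; anything else is held back for review.
    """
    cleared, held_back = [], []
    for record in records:
        has_license = record.get("license") in APPROVED_LICENSES
        has_source = bool(record.get("source"))
        (cleared if has_license and has_source else held_back).append(record)
    return cleared, held_back


if __name__ == "__main__":
    sample = [
        {"id": "wall-001", "license": "owned-in-house", "source": "internal CAD library"},
        {"id": "plan-417", "license": None, "source": "downloaded from web"},
    ]
    cleared, held_back = cleared_for_training(sample)
    print("Cleared:", [r["id"] for r in cleared])
    print("Held back:", [r["id"] for r in held_back])
```

The default is to hold a design back: anything without both a recognized licence and a recorded source stays out of the dataset until someone reviews it.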

Credit: Wienerberger AG

Zena Ryder writes about construction and robotics for businesses, magazines, and websites. Find her at zenafreelancewriter.com.